In cloud computing, the data collected by edge devices — like security cameras, sensors on industrial machines, and IoT devices — or created by end-users needs to be transmitted to a cloud or on-premise data center for processing. In that context, edge data is data created by these edge computing processes that occur at or near the end-user or the source of the data.


Edge computing complements cloud computing by storing and processing some data locally on the edge device or on a nearby edge server. This has the benefits of:

- Reducing latency.

- Improving response times for edge applications.

- Reducing the strain on the network and central cloud servers (which makes it easier to scale).

It also helps organizations avoid relying on edge networks, which are often low-quality and unreliable.

In this guide, we’ll explore how edge computing and edge data processing work by covering the following:

- What edge data and edge computing are

- How the edge computing model works

- 4 edge computing use cases

- How Resilio enhances edge applications

We’ll also show you how our file synchronization software system, Resilio, can enhance the benefits and alleviate the challenges of edge computing — such as reliably transferring over edge networks, managing complex edge environments, and keeping edge data secure.

Resilio is a real-time data replication and synchronization software system that is particularly well-suited to edge applications by collecting and controlling data at the extreme edge.

Resilio runs everywhere: on ships, trucks, and railroad locomotives, and even alongside railroad tracks. Resilio puts the most up-to-date mapping information into the hands of CAL FIRE smokejumpers (i.e., remote wildland firefighters). The common thread among our customers is the mission-critical and life-critical nature of their data: people making real-time decisions depend on the most current and accurate information.

Resilio enables operators to securely transfer payloads of any size and type from the hardest-to-reach places to any other destination, using any network and device. The solution overcomes extreme latency and loss for reliable use across VSAT, cell, radio, Wi-Fi, and intermittent connections to transfer data in fixed and predictable time frames.

Overall, Resilio is the most reliable and efficient transfer solution for edge deployments because it provides:

- WAN acceleration: Resilio uses a proprietary UDP-based WAN acceleration protocol that enables it to fully utilize the bandwidth of any network in order to maximize transfer speed and efficiency. It allows you to transfer data in predictable time frames over VSAT, cell, Wi-Fi, and any IP connection. Resilio’s WAN protocol overcomes extreme latency and loss, enabling many organizations to use it to reliably transfer and sync data at the far edge (e.g., at sea, in communities with underdeveloped network infrastructure, etc.).

- P2P replication: Resilio’s peer-to-peer replication architecture enables every endpoint to share data with any other endpoint simultaneously, making Resilio incredibly fast and eliminating single points of failure. It also enables you to sync in any direction, such as one-way, two-way, one-to-many, many-to-one, and N-way.

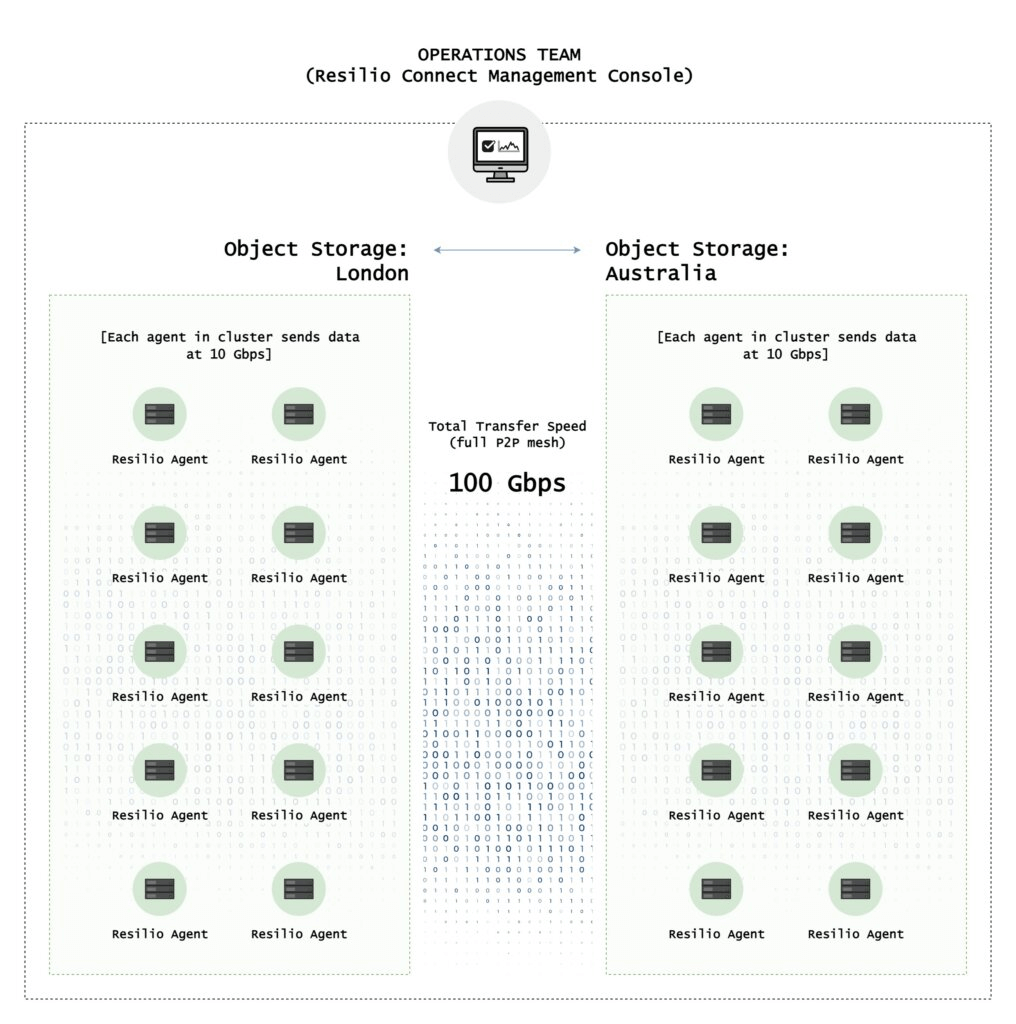

- Organic scalability: Resilio’s P2P architecture provides organic scalability. Adding new endpoints increases replication speed rather than slowing it down, allowing Resilio to sync hundreds of endpoints in fixed time frames. You can also cluster endpoints together to increase speeds linearly and achieve speeds of 100+ Gbps.

- Centralized management: Resilio is an incredibly flexible solution that you can easily install on your existing IT infrastructure. It supports just about any device, operating system, and cloud storage provider, so you can manage and automate replication across your entire environment from Resilio’s Management Console.

- Bulletproof security: Resilio’s bulletproof security features protect your data in vulnerable edge environments.

Organizations in logistics, retail, gaming, media, and more use Resilio to reliably sync and transfer data across hybrid cloud and edge environments. To learn more about how Resilio can enhance your edge applications, schedule a demo with our team.

What Is Edge Data (and Edge Computing)?

Because edge computing is so new, many of the concepts involved aren’t clearly defined. And it can get a bit confusing, as definitions vary from use case to use case. But there are two important aspects of edge computing that you need to understand.

First, edge computing keeps data storage and processing local — i.e., close to the source and/or end-users.

Second, the primary goal of edge computing is to keep data closer to where it’s needed in order to:

- Increase speed and reduce latency: Keeping data processing and analysis at the edge reduces the distance data must travel, thereby increasing the speed of time-sensitive applications.

- Reduce network constraints: As described above, cloud computing can place heavy burdens on a company’s network and centralized servers. By decentralizing data storage and processing (i.e., keeping some data at the edge), businesses can minimize the amount of data transmitted over the network, reduce networking costs, and scale efficiently.

- Avoid/overcome edge networks: Edge networks are usually unreliable and low-quality. By keeping data at the edge, businesses can avoid transmitting over these slow, unreliable networks.

- Increase security: Edge applications can increase security by reducing the amount of data that is transmitted (and vulnerable to interception) and keeping data local, i.e., on devices or in private networks.

For example, imagine a business has multiple HD security cameras monitoring the site 24/7. Naturally, nothing occurs during most of the recordings. But occasionally, the cameras record some type of activity. The business only wants to store the footage where something occurs, and wants to delete the footage where nothing happens (allowing them to minimize storage requirements).

In a classic cloud computing model, the cameras would stream all of the footage to a cloud server. The cloud server would use motion detection to separate and store the footage with activity, and delete the rest of the footage. While saving just the footage with activity helps them reduce storage requirements, this model is still costly and inefficient because:

- Streaming all of those large HD video files puts a constant strain on the building’s network and drives up networking costs.

- Processing that much data will strain the cloud server, which can only handle a certain number of cameras before becoming overloaded.

- Streaming that much data over the network will take a long time.

In an edge computing model, the motion detection analysis would occur at the edge — i.e., either on each camera’s internal computer, on an on-premises edge server, or at a local edge data center.

The edge computing model increases efficiency. Only the footage with activity would be streamed up to the cloud server for storage, reducing the strain on the network and the associated costs. And since the cloud server no longer needs to process as much footage, it could service a larger number of cameras — enabling the business to scale their operation while minimizing costs.
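To make the edge-side filtering step concrete, here is a minimal sketch of the kind of check a camera or on-site edge server could run before uploading anything. It assumes simple frame differencing as the motion test (production systems typically use more robust detectors), represents each frame as a flat list of grayscale pixel values, and uses an arbitrary threshold of 10; the function names are hypothetical.

```python
from typing import List, Sequence

Frame = Sequence[int]  # a grayscale frame flattened to pixel intensities (0-255)


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel change between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def frames_with_motion(frames: List[Frame], threshold: float = 10.0) -> List[int]:
    """Return indices of frames that differ noticeably from the previous frame.

    This runs at the edge, so only the flagged frames (or the clips around
    them) need to be sent to the cloud for storage.
    """
    flagged = []
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) > threshold:
            flagged.append(i)
    return flagged


# Four tiny 4-pixel "frames": an empty scene, no change, an object appears, then it stays put.
frames = [[10, 10, 10, 10], [10, 10, 10, 10], [10, 200, 200, 10], [10, 200, 200, 10]]
print(frames_with_motion(frames))  # [2]
```

Only the change at frame 2 is flagged, so in this toy example three of the four frames never leave the site.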

Edge Computing Architecture: How Edge Data Is Created and Processed

There are many different kinds of edge devices and edge architectures, so no single definition can fit all use cases. But four of the most common elements of an edge architecture are:

1. Edge Devices and Sensors

Edge data is collected by sensors that are either on edge devices or separate from edge devices (such as in Industrial Internet of Things applications).

Edge devices can include IoT devices, vehicles, desktops, laptops, cameras, industrial machines, medical devices, video game consoles/computers, ships at sea, etc. Basically, any device that collects data at the source or that end-users interact with.

2. Edge Servers and Data Centers

Edge data centers have the same components as traditional data centers, but in a much smaller footprint. They come in several forms, but are usually:

- Edge servers deployed on-site by a company.

- Edge servers rented by a company at a regional edge data center.

Edge servers and data centers are located close to the source of data or end-users in order to facilitate lower latency and faster response times.

3. Traditional Cloud Computing Infrastructure

Edge computing is a complementary part of the traditional cloud computing infrastructure, which consists of hyperscale data centers, their storage, and the networks connecting users to them.

In most edge computing scenarios, some of the processing is done locally (on the edge device or data centers) and some is handled in the cloud. Edge computing provides better services to end-users while increasing the efficiency and effectiveness of cloud computing.

4. Replication Solutions

In order to transfer data across edge devices, edge servers, and hybrid cloud endpoints, you need a data replication solution that:

- Transfers quickly: Increasing speed is the main purpose of edge computing. A slow replication solution can become a bottleneck in your application or workflow.

- Optimizes network utilization: Edge applications will have to contend with poor-quality edge networks. Your replication solution must be able to maximize bandwidth utilization on any network to ensure fast transfers from edge to core.

- Scales efficiently: Edge environments can grow quickly. Your replication solution must be able to transfer large amounts of data efficiently, and maintain speed and reliability no matter how large your environment grows.

- Centralizes management: Edge environments can become large and complex. You should be able to manage replication across your entire environment from a centralized interface.

- Keeps data secure: Edge devices are targets for hackers who try to use them to gain access to the rest of your system. Your replication solution must keep your data safe at all times.

Later, we’ll discuss how our file synchronization software system, Resilio, satisfies all of these criteria.

4 Edge Computing Use Cases

To better clarify the concept of edge computing, let’s discuss a few examples that illustrate how edge computing works and the benefits it provides.

1. Remote Work

Remote work is an example of edge computing. Many organizations use hybrid cloud infrastructures that enable employees to work remotely from anywhere in the world, particularly for projects that require employees to operate in the field.

For example, imagine a construction company with projects in different parts of the US. They need to coordinate their projects between employees at the construction site and teams at their offices.

In this scenario, the company’s IT infrastructure may consist of an on-premise server that syncs with a regional cloud server. Employees at the construction site may be using tablets, laptops, or other devices to run design applications that facilitate their work on construction projects. That data then syncs to the regional cloud server and back to the company’s headquarters.

In this case, the devices that the remote employees use are edge devices. They use those devices to collect edge data (e.g., measurements, images, and other info about the construction project) and perform some necessary data processing (e.g., caching files locally, running a VDI, etc.).

2. Fleets

Many organizations utilize a fleet of vehicles that operate in the field — such as airlines, trucking companies, shipping companies, railways, and more.

All of these vehicles have substantial computing onboard. And they often operate in areas where network connections are low-quality or intermittent. Transferring data back and forth between the vehicles and HQ would take too much time and be too unreliable.

This means that many computing processes must be performed at the edge, on the vehicles themselves. And updating the software on each vehicle may require edge computing as well. Transmitting the updates from HQ to each vehicle over low-quality edge networks may be too slow and unreliable. So the company might transfer the updates to a nearby edge server, which can transfer the updates to each vehicle more effectively.

3. Content Delivery

Content providers are looking to extend CDNs and servers even further toward the edge, bringing them closer to end-users across the world. This will enable them to cache content closer to end-users so they can significantly reduce latency and improve the user experience.

Cloud gaming is a prime example of this. Cloud gaming companies want to build edge servers as close to gamers as possible, so they can improve game streams and provide a more seamless gaming experience.

4. Industrial Maintenance and Performance

Manufacturing companies want to use edge computing to improve machine performance and minimize equipment failures. By moving data processing closer to their equipment, they can:

- Monitor machine health with lower latencies.

- Perform real-time data analysis.

- Predict and proactively resolve equipment failures and performance issues.

- Increase output.

How Resilio Enhances Edge Applications

Edge applications require constant data replication between edge devices, edge servers/data centers, and the cloud. Organizations that deploy edge applications need a solution that can quickly transfer data over unreliable edge networks, while also keeping data secure and addressing other issues associated with edge deployments.

Resilio is a real-time file synchronization software system that contains features that make it ideal for workflows at the edge. Resilio is built on a decentralized, peer-to-peer replication architecture that enables it to replicate data at blazing-fast speeds, scale organically, transfer data in any direction, and eliminate single points of failure.

It also uses a proprietary UDP-based WAN acceleration algorithm to fully utilize any type of network connection and overcome the limits of low-quality edge networks.

Resilio facilitates edge applications by providing:

- Optimization of limited edge network connections.

- Blazing-fast replication speeds.

- Organic scalability.

- Centralized management of complex environments.

- Bulletproof data security.

Optimization of Limited Edge Network Connections

One of the main objectives of edge applications is to keep data local (i.e., on edge devices or local networks) in order to avoid transmitting data over the internet to cloud data centers (or on-prem data centers).

Edge applications try to avoid this because:

- Edge deployments are often located far away from the cloud or on-premise data centers. So transferring data back and forth between cloud or on-prem data centers and the edge takes too long and reduces response times.

- Edge deployments are often located in areas with low-quality or intermittent network connectivity, such as at sea (where satellite connections are limited and drop in and out), in remote areas with underdeveloped network infrastructure, or on consumer-grade networks (e.g., cell, Wi-Fi, etc.). Transmissions over these networks are slow and unreliable.

- Some edge deployments involve transmitting big data (i.e., large files or large numbers of files) over low-quality network connections. This can lead to network congestion, which delays data transmission even more.

But most edge applications only process or store some data at the edge, while still requiring some data to be processed at centralized data centers — so transferring data over edge networks is unavoidable.

Resilio solves this issue and helps edge applications transfer data quickly over any network, regardless of distance or quality, using several key features and capabilities:

Proprietary UDP-Based WAN Acceleration Protocol

Most file transfer solutions use TCP-based protocols (such as FTP) to transfer data over WANs. But TCP struggles to fully utilize the bandwidth of long-distance, lossy networks. Most TCP congestion control algorithms treat packet loss as a sign of network congestion and reduce the data transfer rate in response. Since packet loss occurs frequently over WANs, often for reasons other than congestion (such as signal drops on wireless and satellite links), this is a poor response that unnecessarily reduces transfer speed.
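To put the packet-loss point in numbers, the sketch below uses the well-known Mathis et al. approximation for the steady-state throughput of a single Reno-style TCP flow: rate ≈ (MSS / RTT) × (C / √p), with C = √(3/2) ≈ 1.22. The RTT and loss values are illustrative assumptions, not measurements, and newer congestion control algorithms (such as CUBIC or BBR) behave differently; the point is only how quickly loss-based throughput collapses as latency and loss grow, regardless of how much raw bandwidth the link has.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate steady-state throughput (bits/s) of one loss-based TCP flow.

    Mathis et al. approximation: rate ~= (MSS / RTT) * (C / sqrt(p)),
    with C = sqrt(3/2) for Reno-style congestion avoidance.
    """
    c = math.sqrt(1.5)
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))


# Illustrative link parameters (assumed): (label, round-trip time in s, packet loss rate)
links = [("LAN", 0.001, 0.0001), ("terrestrial WAN", 0.08, 0.001), ("VSAT", 0.6, 0.01)]
for label, rtt, loss in links:
    mbps = mathis_throughput_bps(1460, rtt, loss) / 1e6
    print(f"{label:>16}: ~{mbps:,.2f} Mbps per TCP flow")
```

With a 600 ms satellite round trip and 1% loss, the estimate comes out to roughly 0.24 Mbps per flow, which is why a protocol that does not back off on every lost packet can make such a difference at the far edge.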

But Resilio uses a proprietary UDP-based WAN acceleration protocol known as Zero Gravity Transport™ (ZGT), which is designed to fully utilize the bandwidth of any network.

ZGT intelligently analyzes the underlying conditions of a network (such as latency, packet loss, and throughput over time) and adjusts to those conditions dynamically in order to maximize efficiency, speed, and bandwidth utilization.

It accomplishes this by using:

- A congestion control algorithm: ZGT’s algorithm constantly probes the RTT (Round Trip Time) of a network in order to identify and maintain the ideal data packet send rate.

- Interval acknowledgements: ZGT sends acknowledgments for data packets in groups once per RTT, rather than acknowledging each individual packet.

- Delayed retransmission: ZGT retransmits lost packets in groups once per RTT to reduce unnecessary retransmissions.
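The acknowledgment and retransmission ideas can be illustrated in a few lines. This is a generic sketch of interval (batched) acknowledgments and once-per-RTT batched retransmission, not ZGT’s implementation; the class names are hypothetical, and it simplifies by assuming every packet sent during an interval has either arrived or been lost by the next tick, so anything still unacknowledged is treated as lost.

```python
from typing import List, Set


class IntervalAckReceiver:
    """Collects packets and acknowledges them once per RTT instead of per packet."""

    def __init__(self) -> None:
        self.received: Set[int] = set()
        self.pending_ack: Set[int] = set()

    def on_packet(self, seq: int) -> None:
        self.received.add(seq)
        self.pending_ack.add(seq)

    def on_rtt_tick(self) -> List[int]:
        """Emit one aggregated ACK listing everything received since the last tick."""
        ack = sorted(self.pending_ack)
        self.pending_ack.clear()
        return ack


class BatchRetransmitSender:
    """Tracks unacknowledged packets and resends losses in one batch per RTT."""

    def __init__(self) -> None:
        self.in_flight: Set[int] = set()

    def send(self, seqs: List[int]) -> List[int]:
        self.in_flight.update(seqs)
        return seqs

    def on_rtt_tick(self, acked: List[int]) -> List[int]:
        """Apply the aggregated ACK, then return the packets to resend together."""
        self.in_flight.difference_update(acked)
        return sorted(self.in_flight)


sender, receiver = BatchRetransmitSender(), IntervalAckReceiver()
for seq in sender.send(list(range(10))):
    if seq not in (3, 7):  # simulate two packets lost on the link
        receiver.on_packet(seq)
ack = receiver.on_rtt_tick()  # one ACK covers eight packets
print(sender.on_rtt_tick(ack))  # [3, 7] resent as one batch
```

Instead of ten individual ACKs and two separate loss reactions, the link carries one aggregated ACK and one retransmission batch per round trip.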

ZGT is designed to deliver predictable transfer speeds across any type of connection, regardless of distance, latency, and packet loss. In fact, our engineers were able to transfer a 1 TB payload across Azure regions in 90 seconds.
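For a sense of scale, assuming a decimal terabyte (10^12 bytes) and ignoring protocol overhead, moving 1 TB in 90 seconds corresponds to an average throughput of roughly 89 Gbps:

$$
\frac{1\,\text{TB}}{90\,\text{s}} = \frac{10^{12}\,\text{B} \times 8\,\text{bit/B}}{90\,\text{s}} \approx 8.9 \times 10^{10}\,\text{bit/s} \approx 89\,\text{Gbps}
$$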

Resilio can use TCP to transfer data over the LAN (between local edge devices and from edge devices to your edge data center) while using ZGT to transfer data over the WAN. This reduces the time it takes to transfer data between the cloud and your edge application, enabling you to improve response times even more.
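One way to picture this LAN/WAN split is as a per-peer decision based on where the peer sits. The sketch below is a hypothetical policy that classifies a peer by whether its address falls inside one of the site’s local subnets; the subnets and the "tcp"/"udp-wan" labels are placeholders, and Resilio’s actual selection logic is not described here.

```python
import ipaddress
from typing import Iterable


def choose_transport(peer_ip: str, local_networks: Iterable[str]) -> str:
    """Pick 'tcp' for peers on a local subnet and 'udp-wan' for everything else.

    Hypothetical policy for illustration; the labels are placeholders,
    not Resilio settings.
    """
    addr = ipaddress.ip_address(peer_ip)
    for net in local_networks:
        if addr in ipaddress.ip_network(net):
            return "tcp"
    return "udp-wan"


site_lan = ["10.20.0.0/16", "192.168.1.0/24"]
print(choose_transport("10.20.4.17", site_lan))   # tcp (device on the same site LAN)
print(choose_transport("203.0.113.9", site_lan))  # udp-wan (cloud endpoint across the WAN)
```

Keeping the decision per peer means a single edge server can use TCP toward its local devices while using the WAN-optimized path toward the cloud at the same time.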

Transfers at the Far Edge

Because ZGT optimizes network utilization, you can use Resilio to transfer over any type of network connection — such as VSAT, cell (3G, 4G, 5G), Wi-Fi, and any IP connection. This makes Resilio ideal for deployments at the far edge of networks, such as at sea or in areas with underdeveloped network infrastructure.

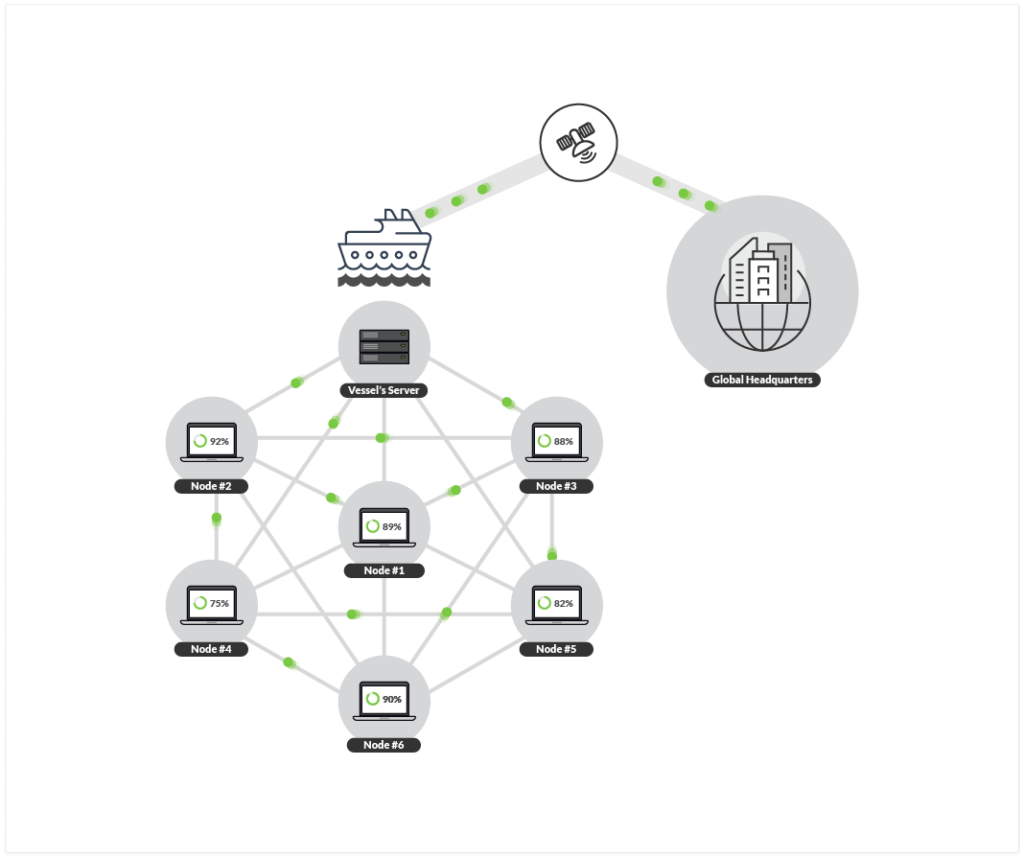

For example: One of our customers is a marine construction company with a fleet of 30 ships operating at sea. Each ship has roughly 20-40 workstations on board that need to be patched and secured with the latest security updates. These updates need to be transmitted over low-bandwidth satellite connections that drop in and out frequently.

They couldn’t reliably deliver updates with their previous solution (Microsoft SCCM with a Distribution Point on every vessel), and mission-critical systems on their ships fell years behind on their security updates.

Resilio helped them overcome this issue using:

- WAN-optimized transfer: With ZGT, they’re able to quickly and reliably deliver updates to each ship’s central server.

- Automation: Using Resilio’s powerful scripting and automation capabilities, they programmed the updates to automatically distribute from the central server to each workstation onboard the ship over the LAN.


Bandwidth Controls

Variations in network bandwidth are another issue for edge applications.

For example, an organization with a remote workforce may have some employees working from an office, some working from home, and some working in the field. The office employees may be working on a reliable, high-bandwidth network connection. But the employees at the edge (i.e., working from home or in the field) may be working on low-bandwidth, consumer-grade connections of varying quality.

This creates an issue when trying to sync data and collaborate with a distributed team. Employees on slow networks will take a long time to download and upload files, which can delay workflows and cause datasets to fall out of sync.

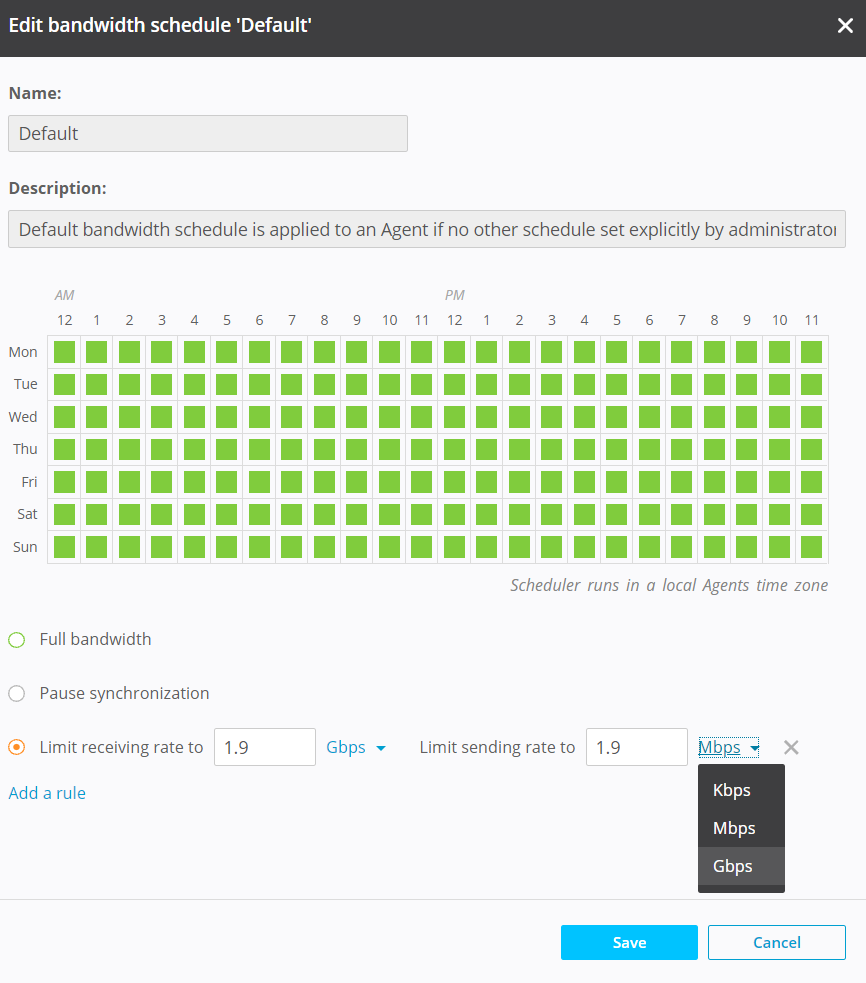

Resilio provides bandwidth controls that can help resolve this issue. From Resilio’s Management Console, you can control bandwidth at each endpoint and even create profiles that govern how much bandwidth each endpoint is allotted at certain times of the day and on certain days of the week.
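A time-based bandwidth profile boils down to a lookup: given the current day and hour, which limit applies? The sketch below is a hypothetical data model (the `BandwidthRule` fields and the first-match semantics are assumptions, not Resilio’s profile format) showing how such a schedule might be evaluated for one endpoint.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional, Set


@dataclass
class BandwidthRule:
    days: Set[int]     # weekday numbers, Monday=0 ... Sunday=6
    start_hour: int    # inclusive
    end_hour: int      # exclusive
    limit_mbps: float


def limit_for(rules: List[BandwidthRule], when: datetime,
              default_mbps: Optional[float] = None) -> Optional[float]:
    """Return the first matching rule's limit; None means unlimited."""
    for rule in rules:
        if when.weekday() in rule.days and rule.start_hour <= when.hour < rule.end_hour:
            return rule.limit_mbps
    return default_mbps


# Example profile for a home-office endpoint (values are illustrative):
weekdays = {0, 1, 2, 3, 4}
profile = [
    BandwidthRule(days=weekdays, start_hour=9, end_hour=18, limit_mbps=5.0),    # working hours: leave room for calls
    BandwidthRule(days=weekdays, start_hour=18, end_hour=24, limit_mbps=50.0),  # weekday evenings
]
print(limit_for(profile, datetime(2024, 3, 4, 10, 30)))  # Monday 10:30 -> 5.0
print(limit_for(profile, datetime(2024, 3, 9, 10, 30)))  # Saturday -> None (unlimited)
```

Throttling sync during working hours and opening it up in the evening lets a home-office endpoint catch up on large files without competing with the user’s daytime traffic.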

Skywalker Sound used Resilio to facilitate their transition to a remote work model during the COVID-19 pandemic. But this meant that employees were working on networks of different speeds. To keep their data efficiently synchronized, they used Resilio’s bandwidth controls to manage sync speeds and ensure everyone was working with the same dataset.


Blazing-Fast Replication Speeds

Edge applications are designed to keep all or some data local (on edge devices and servers) in order to reduce latency and enable faster response times.

One of the main reasons Resilio is so well-suited to edge applications is that it’s designed around the same principle.

Most file transfer solutions are built on a hub-and-spoke replication architecture, which is a centralized replication model. It consists of a hub server and multiple remote servers. The remote servers can’t share data with each other directly. Instead, all data transfers must first go through the hub server, which then replicates the data to each remote server one by one.

This kind of centralized replication inhibits speed, as transfers must first go through the hub server (a process known as cloud-hopping), adding an unnecessary step that delays the synchronization process. It also inhibits scalability, as the hub server can only sync data with one remote server at a time, so syncing a large environment (e.g., large files, large numbers of files, many endpoints) takes a long time.

While Resilio can be configured in a hub-and-spoke architecture, it’s built on a peer-to-peer replication architecture. Resilio’s P2P replication works by installing Resilio agents on every endpoint that you want to share and receive data. Every endpoint with a Resilio agent can:

- Share data directly with every other Resilio agent, with no need for cloud-hopping.

- Share data simultaneously, creating a decentralized mesh network where every endpoint can work together to quickly sync data across your environment.

Decentralized, Low-Latency Replication and Sync

In a cloud computing environment, all data must be transferred to the cloud, where all computing processes occur. In edge computing, some or all of the processing occurs locally at an edge device or data center. This enables organizations/applications to increase speed and distribute the storage and computing requirements across an environment.

Similarly, in hub-and-spoke replication, all data must be transferred to the hub server, which then syncs that data across your environment. But with P2P replication, transfers occur directly from endpoint to endpoint. And all endpoints can work together to sync data.

Like the edge computing model, Resilio’s P2P architecture:

- Reduces latency by delivering data directly to each endpoint and enabling syncs to occur simultaneously.

- Distributes the load across your environment.

For example, imagine you wanted to replicate a file across five endpoints. Resilio can use a process known as file chunking to split the file into multiple chunks that can transfer independently of each other. Endpoint 1 can share the first chunk with Endpoint 2. Endpoint 2 can share that chunk with any other endpoint, even while it waits to receive the remaining chunks. Soon, every endpoint will be sharing file chunks concurrently, enabling you to sync your environment 3-10x faster than traditional hub-and-spoke solutions.
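The chunk-swarming idea can be explored with a toy round-based simulation. The model is a deliberate simplification and an assumption on our part: every node (including the hub or source) can upload at most one chunk and download at most one chunk per round, peers pick chunks greedily, and bandwidth, latency, and Resilio’s real scheduling are ignored. It illustrates the pipelining effect, not the 3-10x figure above.

```python
from typing import List, Set, Tuple


def hub_and_spoke_rounds(num_receivers: int, num_chunks: int) -> int:
    """The hub is the only uploader and sends one chunk per round."""
    return num_receivers * num_chunks


def p2p_rounds(num_receivers: int, num_chunks: int) -> int:
    """Rounds needed when every peer re-shares the chunks it already holds.

    Node 0 is the source and starts with every chunk; each node uploads at
    most one chunk and downloads at most one chunk per round.
    """
    have: List[Set[int]] = [set(range(num_chunks))] + [set() for _ in range(num_receivers)]
    rounds = 0
    while any(len(chunks) < num_chunks for chunks in have):
        rounds += 1
        busy_receivers: Set[int] = set()
        transfers: List[Tuple[int, int]] = []
        for sender, sender_chunks in enumerate(have):
            for receiver, receiver_chunks in enumerate(have):
                if receiver == sender or receiver in busy_receivers:
                    continue
                missing = sender_chunks - receiver_chunks
                if missing:
                    transfers.append((receiver, min(missing)))
                    busy_receivers.add(receiver)
                    break  # this sender's single upload slot is used for the round
        # Deliver after the round, so a new chunk is re-shared starting next round.
        for receiver, chunk in transfers:
            have[receiver].add(chunk)
    return rounds


print(hub_and_spoke_rounds(4, 4), p2p_rounds(4, 4))  # 16 7
```

With four receivers and four chunks, the hub needs 16 rounds because it is the only sender, while the swarm finishes in 7 because every receiver starts forwarding chunks as soon as it has one.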

N-Way Sync and High Availability

As stated earlier, one of the central tenets of edge computing is keeping data close to end-users or the source of data. Keeping data local allows you to:

- Reduce latency, resulting in faster processing and response times.

- Utilize local computing resources for processing in order to decrease the strain on your centralized data centers and network.

In a hub-and-spoke environment, data must first transfer to the cloud before it can be sent to its destination. But with Resilio’s P2P architecture, data can remain local at each endpoint and transfer directly to its destination.

In addition to increasing sync speed, Resilio’s P2P architecture enables it to sync in any direction — such as one-way, two-way, one-to-many, many-to-one, and N-way (i.e., many-to-many).

N-way sync is a particularly powerful capability for edge applications, as it:

- Enables you to keep multiple endpoints (even thousands of endpoints) synchronized simultaneously. In a remote work scenario, an employee located anywhere can make a change to a file and that file change will immediately sync to employees at every other location.

- Enables you to configure Active-Active High Availability scenarios. Resilio effectively turns every endpoint in your environment into a backup site. In the event of a planned or unplanned outage, you can configure failover between any of your nodes. And every endpoint can work together to bring your application back online and increase uptime for your application.

For example: One of our customers is a North American engineering and construction company that builds a variety of extreme engineering projects, such as stadiums, bridges, and power plants. Some of their employees work remotely at construction sites, where they require fast access to mission-critical apps and digital assets (such as building plans, CAD applications and drawings, 3D designs, and more).

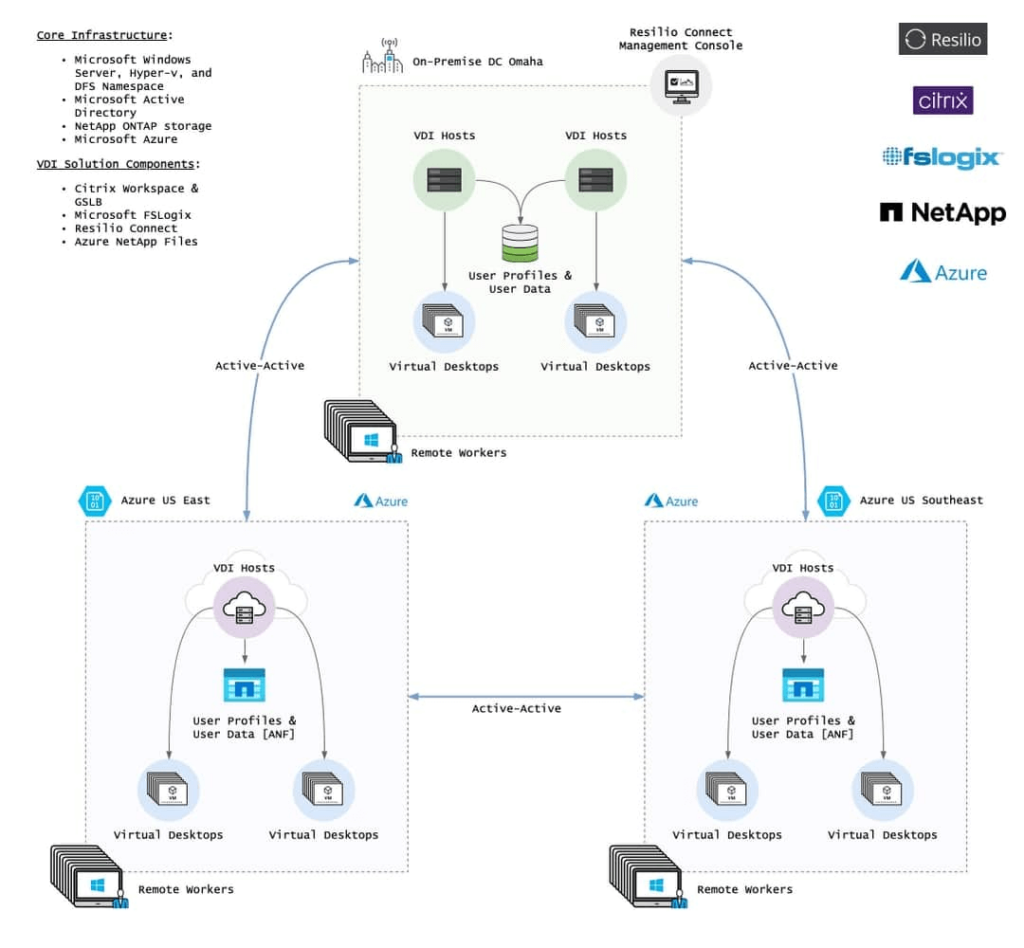

The company provides access to these tools through their VDI (Virtual Desktop Infrastructure) — a multi-site Citrix and Microsoft FSLogix solution for VDI that’s built on Azure NetApp Files, on-premise NetApp storage, and Microsoft Azure cloud storage.

Syncing VDI profile data across all sites took far too long with their previous solutions, resulting in slow login times and recovery. But with Resilio, every endpoint can sync data directly with every other endpoint simultaneously. So when a user logs off, all of their data is replicated and available across all sites within five minutes.

This reduction in latency (i.e., replication time across all sites) has led to:

- Faster login times: With their previous solution, login times took 90 seconds. With Resilio, they’re averaging 15-30 seconds from click to desktop.

- Data locality: They’re able to bring strategic applications and large datasets closer to end-users, enabling fast and seamless workflows.

- Faster recovery: Resilio’s blazing-fast replication enabled them to reach their 5-minute recovery goal for planned and unplanned outages.

According to the project lead at the company:

“With Resilio, we can keep our edge synchronized with our core on-premise data center and 2 cloud locations. Resilio keeps all data updated and replicated. We found no other way to implement an active-active 3-node VDI host cluster across sites.”


Organic Scalability

By performing some data processing at the edge rather than at cloud data centers, edge applications reduce the burden on your network and cloud infrastructure. This allows you to scale your operation in a cost-effective way.

As stated earlier, traditional hub-and-spoke replication solutions scale poorly because of their centralized architecture. The hub server can only transfer data to one remote server at a time, so adding more endpoints (or increasing the size of the data) increases the time it takes to sync your environment.

But Resilio’s P2P architecture makes it organically scalable. Since every endpoint can share data simultaneously, adding more endpoints increases replication resources (e.g., bandwidth, CPU, etc.) and speed.

In other words, more demand inherently creates more supply. Resilio can sync 200 endpoints in roughly the same time it takes most hub-and-spoke solutions to sync just two.

And Resilio can sync files of any size, type, and number (our engineers synchronized 450+ million files in a single job).

Resilio also provides a feature known as horizontal scale-out replication, which allows you to cluster endpoints together to pool their resources and increase replication speeds linearly. For example, our engineers were able to cluster Resilio agents together and reach speeds of 100+ Gbps.

This is incredibly useful in edge applications because every new edge device you add to your environment increases replication speed and resources. And you can cluster devices together to dramatically increase replication speeds.

The North American engineering client we mentioned earlier used scale-out replication to more than triple site-to-site replication performance, from 2.5 Gbps to 8 Gbps.

Centralized Management of Complex Environments

Since edge applications must be close to the data sources/end-users, edge infrastructures can quickly grow in:

- Complexity: Large edge applications create many separate systems in different locations that must be managed by large, experienced IT teams.

- Vulnerability: Since edge applications are particularly vulnerable to hacking and malicious actors, you’ll need to invest in more security.

- Costs: As your edge application grows, you’ll need to invest in physical infrastructure (servers, devices, etc.) and networks to ensure low-latency data transfers and fast application performance.

We’ll discuss how Resilio’s bulletproof security features address the issue of vulnerability in the next section. And we’ve already discussed how Resilio’s P2P architecture and WAN acceleration protocol deliver fast application performance over any network along with organic scalability, both of which can help reduce costs.

But Resilio’s centralized management and automation capabilities help control costs and make complex edge applications easier to manage.

Easy, Flexible Installation

Resilio is an incredibly versatile solution that you can seamlessly integrate into your existing IT infrastructure and workflow. You can install Resilio agents on just about any:

- Device: Resilio supports IoT devices (such as security cameras, drones, etc.), edge servers, file servers, desktops, laptops, NAS/DAS/SAN devices, mobile devices (Resilio offers iOS and Android apps), and virtual machines (such as Citrix, VMware, and Microsoft Hyper-V).

- Cloud storage providers: Resilio supports just about any cloud object storage, including S3-compatible storage, such as AWS S3, Azure Blob Storage, Google Cloud Storage, Wasabi, NetApp, WekaIO, Backblaze, MinIO, and more.

- Operating systems: Resilio supports Windows, macOS, Linux, Unix, FreeBSD, OpenBSD, Ubuntu, and more.

- Workflow solutions: You can use Resilio’s REST API to integrate it with just about any solution your team is already using, such as management tools (LCE, Microsoft SCOM, Splunk, etc.), engineering tools (AutoCAD, Revit, CSI SAP2000, etc.), media tools (DaVinci Resolve, Adobe Premiere Pro, Avid Media Composer, etc.), development tools (Jenkins, TeamCity, etc.), and more.

This level of flexibility means that, no matter what devices you require, you can use Resilio to transfer and sync data across your entire edge application.

Centralized Management

You can manage your entire replication environment from Resilio’s Management Console, a user-friendly graphical interface that can be accessed from any web browser.

Through the Management Console, you can obtain granular control over every aspect of replication and perform functions such as:

- Creating, managing, and monitoring replication jobs.

- Controlling which files and folders sync to which specific endpoints. For example, you can configure some data to sync from edge devices to edge servers and other data to sync from edge devices to the cloud.

- Reviewing a history of all executed jobs.

- Creating automation policies that govern how data is synchronized, cached, purged, and downloaded. For example, you can configure Resilio to automatically purge files from a cache after a certain number of days.

- Adjusting replication parameters, such as disk I/O, data hashing, file priorities, and more.

- Automating troubleshooting processes, such as file conflict resolution.

- Adjusting bandwidth manually at each endpoint and creating bandwidth profiles, as stated earlier.
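To make the purge-policy idea concrete, here is a minimal Python sketch of an age-based cache purge. It assumes the cache is a plain local directory and that a file’s "age" is the time since it was last modified. The `purge_cache` function and its `max_age_days` parameter are hypothetical names for illustration only; in Resilio itself, policies like this are created in the Management Console rather than written by hand.

```python
import time
from pathlib import Path
from typing import List, Optional

SECONDS_PER_DAY = 86400


def purge_cache(cache_dir: str, max_age_days: float, now: Optional[float] = None) -> List[Path]:
    """Hypothetical age-based purge policy.

    Deletes every regular file under cache_dir whose last-modified time is
    more than max_age_days in the past, and returns the deleted paths.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * SECONDS_PER_DAY
    removed: List[Path] = []
    # Materialize the listing first so we never delete while walking the tree.
    for path in list(Path(cache_dir).rglob("*")):
        # Directories are left in place; only files are purged.
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            removed.append(path)
    return removed
```

Passing `now` explicitly is optional; it exists so the policy can be tested deterministically instead of depending on the wall clock.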
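Per-endpoint bandwidth limits and time-based bandwidth profiles are commonly built from a rate limiter whose rate changes on a schedule. The Python sketch below pairs a hypothetical hour-based profile with a standard token-bucket limiter. The profile format, `limit_for_hour`, and `TokenBucket` are assumptions made for this illustration and do not describe how Resilio implements bandwidth control.

```python
from typing import List, Tuple

# Hypothetical bandwidth profile: (start_hour, end_hour, limit in bytes/second).
Profile = List[Tuple[int, int, float]]


def limit_for_hour(profile: Profile, hour: int, default: float) -> float:
    """Return the rate limit that applies at the given hour (0-23)."""
    for start, end, limit in profile:
        if start <= hour < end:
            return limit
    return default


class TokenBucket:
    """Classic token-bucket limiter: tokens refill at `rate` bytes/second."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = 0.0

    def allow(self, nbytes: float, now: float) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        # Send only if enough tokens remain; otherwise the caller waits.
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False
```

For example, a profile of `[(8, 18, 1_000_000.0)]` would cap an endpoint at roughly 1 MB/s between 08:00 and 18:00 and fall back to the default limit at other hours; a sync loop would look up the current limit and update `bucket.rate` when the hour changes.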

Automation

You can use Resilio’s REST API to script any type of automation your jobs require. Resilio provides three types of automation triggers:

- Before a job starts

- After a job completes

- After all jobs complete
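The three trigger points above map naturally onto a hook pattern. The following Python sketch is a hypothetical illustration of that pattern only: `JobRunner`, the trigger names, and passing hooks as plain callables are assumptions for this example, not Resilio’s scripting interface or REST API. It shows where a before-job, after-job, and after-all-jobs script would run relative to the transfers themselves.

```python
from typing import Callable, Dict, List

Hook = Callable[[str], None]


class JobRunner:
    """Hypothetical runner that fires hooks at the three trigger points."""

    def __init__(self) -> None:
        self.hooks: Dict[str, List[Hook]] = {
            "before_job": [],
            "after_job": [],
            "after_all_jobs": [],
        }

    def on(self, trigger: str, hook: Hook) -> None:
        # Reject typos early instead of silently never firing a hook.
        if trigger not in self.hooks:
            raise ValueError(f"unknown trigger: {trigger}")
        self.hooks[trigger].append(hook)

    def _fire(self, trigger: str, name: str) -> None:
        for hook in self.hooks[trigger]:
            hook(name)

    def run(self, jobs: Dict[str, Callable[[], None]]) -> None:
        for name, transfer in jobs.items():
            self._fire("before_job", name)   # e.g. stop a service, take a snapshot
            transfer()                       # the sync/transfer itself
            self._fire("after_job", name)    # e.g. install an update, report status
        self._fire("after_all_jobs", "all")  # e.g. send a fleet-wide summary


if __name__ == "__main__":
    runner = JobRunner()
    runner.on("before_job", lambda name: print(f"preparing {name}"))
    runner.on("after_job", lambda name: print(f"installing update on {name}"))
    runner.on("after_all_jobs", lambda _: print("sending compliance report"))
    runner.run({"vessel-a": lambda: print("syncing vessel-a")})
```

An after-job hook that installs an update and reports status back is the kind of workflow the Northern Marine Group example below describes.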

Northern Marine Group uses Resilio’s automation and management capabilities to distribute updates and monitor the health of their ships at sea. Not only can their IT team manage and monitor the entire process remotely, but Resilio has also enabled them to reduce the time it takes to bring their fleet into compliance by 92% compared to their previous solution (burning the updates onto CDs and mailing them).

According to their Head of IT, Paul Clark:

“Being able to use the scripting engine meant that we could essentially have the updates distribute, install and report back on status all automatically, allowing us to avoid the installation going wrong because of user error. For a few of the ships where we did have issues, all we had to do was create some modified scripts and distribute those using Resilio as well.”

You can read more about how Resilio helps Northern Marine Group distribute updates at the edge here.

Bulletproof Data Security

Edge environments create additional security risks. Your environment becomes larger and more disparate, making it harder to protect. Edge networks are also less secure and therefore more vulnerable to attack from malicious actors, who often try to compromise edge devices as a way into the rest of your system.

Resilio protects sensitive data with security features that have been reviewed by third-party security experts (Resilio is TPN Blue and SOC 2 certified), such as:

- End-to-end encryption: Edge applications often involve lots of data transfers, particularly between edge devices and edge data centers. Resilio uses AES 256-bit encryption to encrypt data in transit and at rest.

- Cryptographic data integrity validation: Hackers will often try to intercept files and insert malicious material used to gain access to your system. Resilio uses data integrity validation to ensure data arrives at its destination intact and uncorrupted. Damaged or corrupted files are immediately discarded and scheduled for retransmission.

- Immutable copies: Resilio stores immutable copies of data in the cloud to protect against ransomware.

- Mutual authentication: Hackers will often try to spoof edge devices in order to gain access to the rest of your network. But Resilio requires every endpoint to provide an authentication key before it can receive files, ensuring your data is only delivered to authenticated endpoints.

- Permission controls: You can limit access to certain data to privileged employees by controlling who is allowed to access specific files and folders.

- Forward secrecy: Resilio protects each session with one-time session encryption keys, so even if a key is later compromised, previously recorded sessions remain protected.

- Proxy server: Resilio’s Proxy Server capabilities enable you to support advanced firewall configurations, limit who can access certain files and folders on your devices, and prevent unapproved scripts from running on your devices.
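Cryptographic integrity validation generally works by having the receiver recompute a hash of what it received and compare it against the hash the sender published. The Python sketch below illustrates that general technique with SHA-256 over fixed-size chunks. The chunk size, the function names, and the choice of SHA-256 are assumptions for illustration, not a description of Resilio’s internal protocol. Chunks whose hash does not match are discarded, and their indices are returned so they can be requested again.

```python
import hashlib
from typing import Dict, List

CHUNK_SIZE = 4 * 1024 * 1024  # 4 MiB; an arbitrary choice for this sketch


def chunk_hashes(data: bytes, chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Sender side: SHA-256 digest of each fixed-size chunk."""
    return [
        hashlib.sha256(data[i:i + chunk_size]).hexdigest()
        for i in range(0, len(data), chunk_size)
    ]


def verify_chunks(received: Dict[int, bytes], expected: List[str]) -> List[int]:
    """Receiver side: keep chunks that match, discard the rest.

    Returns the indices (in ascending order) of chunks that are missing
    or corrupted and therefore need to be retransmitted.
    """
    to_retransmit = []
    for index, digest in enumerate(expected):
        chunk = received.get(index)
        if chunk is None or hashlib.sha256(chunk).hexdigest() != digest:
            received.pop(index, None)  # discard the damaged chunk, if any
            to_retransmit.append(index)
    return to_retransmit
```

Note that a hash comparison only detects deliberate tampering if the expected digests themselves arrive over an authenticated channel; otherwise an attacker could replace both the data and its hash.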
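Mutual authentication means each side proves its identity to the other before any data flows. One common way to do this with a shared key is an HMAC challenge-response exchange, sketched below in Python using the standard `hmac` and `secrets` modules. This is a generic illustration that assumes a pre-shared key; the `Endpoint` class and `mutually_authenticate` function are hypothetical, and Resilio’s actual authentication scheme may work differently.

```python
import hashlib
import hmac
import secrets


class Endpoint:
    """Hypothetical endpoint holding a pre-shared authentication key."""

    def __init__(self, name: str, key: bytes) -> None:
        self.name = name
        self._key = key

    def new_challenge(self) -> bytes:
        # A fresh random nonce per attempt prevents replaying old responses.
        return secrets.token_bytes(32)

    def respond(self, challenge: bytes) -> bytes:
        # Prove possession of the key without sending it; binding our own
        # name into the MAC stops a response from being reflected back.
        msg = self.name.encode() + b"|" + challenge
        return hmac.new(self._key, msg, hashlib.sha256).digest()

    def verify(self, peer_name: str, challenge: bytes, response: bytes) -> bool:
        msg = peer_name.encode() + b"|" + challenge
        expected = hmac.new(self._key, msg, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)  # constant-time comparison


def mutually_authenticate(a: Endpoint, b: Endpoint) -> bool:
    """Each side challenges the other; both must succeed before files move."""
    challenge_for_b = a.new_challenge()
    challenge_for_a = b.new_challenge()
    b_ok = a.verify(b.name, challenge_for_b, b.respond(challenge_for_b))
    a_ok = b.verify(a.name, challenge_for_a, a.respond(challenge_for_a))
    return a_ok and b_ok
```

A spoofed device that claims a legitimate name but lacks the key cannot produce a valid response, so it fails the check and never receives files.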

Use Resilio for Edge Data Sync and Transfer

Resilio is a file sync and transfer software system designed to support edge computing goals. Its key capabilities include:

- Network optimization: Resilio uses a proprietary WAN acceleration protocol known as ZGT that optimizes transfers over any network, regardless of quality, distance, or latency. ZGT enables Resilio to work over any type of network connection, such as VSAT, cell, Wi-Fi, and any IP connection — so you can quickly sync and transfer data from edge (and far edge) to core.

- P2P sync architecture: Unlike hub-and-spoke solutions, Resilio’s P2P sync architecture avoids cloud-hopping and enables direct, low-latency sync between endpoints. Every endpoint can work together to sync your environment 3-10x faster than traditional solutions. And you can sync in any direction, such as one-way, two-way, one-to-many, many-to-one, and N-way sync — which enables you to keep thousands of endpoints synchronized simultaneously.

- Organic scalability: Resilio’s P2P architecture makes it organically scalable, as every device in your environment can share files simultaneously. Each new endpoint adds replication resources, so adding endpoints increases speed rather than slowing it down. Resilio can also perform horizontal scale-out replication, which clusters endpoints to increase speeds linearly, to reach speeds of 100+ Gbps.

- Centralized management and automation: Resilio is a flexible solution that you can deploy on your existing IT infrastructure with minimal operational interruption. It supports just about any device, operating system, cloud service provider, and workflow solution. You can manage your entire environment from Resilio’s Management Console. And you can use Resilio’s REST API to script any type of functionality your jobs require.

- Bulletproof security: Resilio contains bulletproof security features that protect your data in vulnerable edge environments — such as AES 256-bit encryption, cryptographic data integrity validation, mutual authentication, and more.

Organizations in logistics, retail, gaming, media, and more use Resilio to reliably sync and transfer data across hybrid cloud and edge environments. To learn more about how Resilio can enhance your edge applications, schedule a demo with our team.